Each interviewer listens to each recording once and assigns a “pass” or “fail” score.

ATTRIBUTE AGREEMENT ANALYSIS MINITAB SERIES

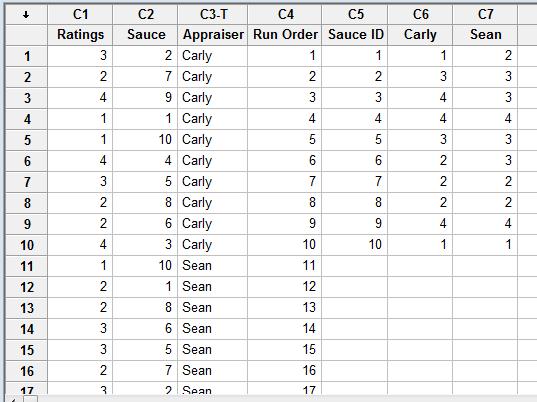

Analyze the results: Is there good agreement between appraisers? Each appraiser vs.

Draw your conclusions and decide on the course of action needed if the level of agreement is below a set threshold. An agreement level above 80 percent is usually considered good.

Attribute: Non-numerical data, also known as discrete data; for example, good or bad, or pass or fail.

Standard: A value given to an item by an expert or a panel of experts that is considered the “true value” or “true answer.”

Example: A large corporation is conducting a series of interviews for technical support positions and has received a large number of applications.
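The agreement checks listed above (each appraiser against the standard, and the appraisers against each other) can be sketched as simple percentages. This is a minimal sketch under stated assumptions, not Minitab's own computation: the ratings, the appraiser names, the one-trial-per-appraiser layout and the `THRESHOLD` constant are all hypothetical, and plain percent agreement does not correct for chance the way kappa does.

```python
from typing import Dict, List

# Hypothetical data: one pass/fail rating per recording from each appraiser
# (one trial each) and the expert "standard" for the same recordings.
standard = ["pass", "fail", "pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail"]
ratings: Dict[str, List[str]] = {
    "Appraiser A": ["pass", "fail", "pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail"],
    "Appraiser B": ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass", "fail", "fail"],
}
THRESHOLD = 80.0  # percent; hypothetical constant for the "above 80 percent" rule of thumb


def percent_agreement(a: List[str], b: List[str]) -> float:
    """Percentage of positions at which two equally long rating lists match."""
    if len(a) != len(b) or not a:
        raise ValueError("rating lists must be non-empty and of equal length")
    return 100.0 * sum(x == y for x, y in zip(a, b)) / len(a)


def between_appraisers(all_ratings: Dict[str, List[str]]) -> float:
    """Percentage of items on which every appraiser gave the same rating."""
    columns = list(zip(*all_ratings.values()))  # one tuple of ratings per item
    return 100.0 * sum(len(set(col)) == 1 for col in columns) / len(columns)


for name, r in ratings.items():
    pct = percent_agreement(r, standard)
    status = "OK" if pct > THRESHOLD else "below threshold, action needed"
    print(f"{name} vs standard: {pct:.0f}% ({status})")
print(f"Between appraisers: {between_appraisers(ratings):.0f}%")
```

With this made-up data, Appraiser A matches the standard on all ten recordings, while Appraiser B matches on seven (70 percent) and would fall below the threshold.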

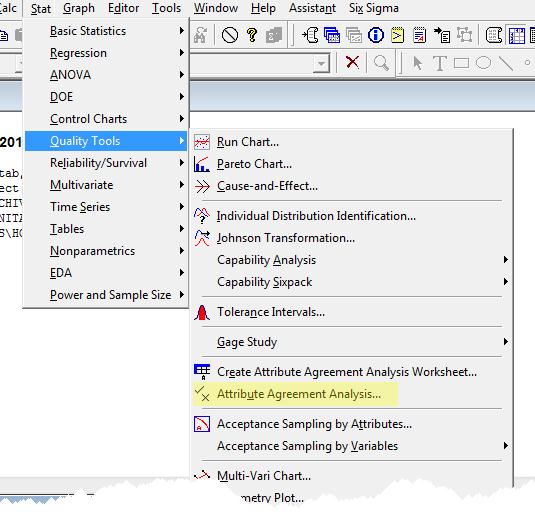

ATTRIBUTE AGREEMENT ANALYSIS MINITAB SOFTWARE

1. Set up a structured study in which a number of items are assessed more than once by more than one assessor.
2. Have the items judged by an expert, referred to as the “standard” (this can be one person or a panel; see table below).
3. Conduct the assessment with the assessors in a blind environment: they do not know when they are evaluating the same items, and they do not know what the other assessors are doing.
4. Enter the data in a statistical software package or in an Excel spreadsheet already set up to analyze this type of data (built-in formulas).
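One way to hold the data from the steps above is a long-format table with one row per assessment: (assessor, trial, item, rating). That layout is an assumption of this sketch, not something the article prescribes, and the helper names `blind_order` and `within_assessor_agreement` are hypothetical. The sketch shuffles the presentation order for each trial (so assessors cannot recognize repeated items) and reports, for each assessor, the percentage of items rated the same way on every trial.

```python
import random
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# One row per assessment: (assessor, trial, item, rating).
Row = Tuple[str, int, int, str]


def blind_order(items: List[int], seed: int) -> List[int]:
    """Shuffle the presentation order for one trial so repeats are not recognizable."""
    order = list(items)
    random.Random(seed).shuffle(order)
    return order


def within_assessor_agreement(rows: List[Row]) -> Dict[str, float]:
    """Percentage of items each assessor rated identically on every trial."""
    ratings_seen: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for assessor, _trial, item, rating in rows:
        ratings_seen[(assessor, item)].add(rating)
    consistent: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for (assessor, _item), ratings in ratings_seen.items():
        total[assessor] += 1
        consistent[assessor] += int(len(ratings) == 1)  # one distinct rating = consistent
    return {a: 100.0 * consistent[a] / total[a] for a in total}


# Hypothetical study: 3 items, 2 assessors, 2 trials each.
items = [1, 2, 3]
print("Trial 1 order:", blind_order(items, seed=1))
print("Trial 2 order:", blind_order(items, seed=2))

rows: List[Row] = [
    ("A", 1, 1, "pass"), ("A", 2, 1, "pass"),
    ("A", 1, 2, "fail"), ("A", 2, 2, "fail"),
    ("A", 1, 3, "pass"), ("A", 2, 3, "fail"),
    ("B", 1, 1, "pass"), ("B", 2, 1, "pass"),
    ("B", 1, 2, "fail"), ("B", 2, 2, "fail"),
    ("B", 1, 3, "pass"), ("B", 2, 3, "pass"),
]
print(within_assessor_agreement(rows))
```

Here assessor A changes their rating of item 3 between trials, so A is consistent on two of three items, while B is consistent on all three.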

Fleiss' kappa is a statistical measure for assessing the reliability of agreement between a fixed number of raters when they assign categorical ratings to a number of items or classify items. This contrasts with other kappas, such as Cohen's kappa, which only work when assessing the agreement between no more than two raters, or intra-rater reliability (one appraiser against themself). The measure calculates the degree of agreement in classification over and above what would be expected by chance.

Statistical packages can calculate a standard score (z-score) for Cohen's kappa or Fleiss' kappa, which can be converted to a p-value. However, even if the p-value reaches the level of statistical significance (usually less than 0.05), this only indicates that the agreement between raters is significantly better than would be expected by chance. The p-value alone does not tell you whether the agreement is good enough to have a high predictive value.

An example of the use of Fleiss' kappa: consider fourteen psychiatrists who are asked to examine ten patients. Each psychiatrist gives one of five diagnoses to each patient.
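The kappa, z-score and p-value described above can be sketched in Python from the standard Fleiss' kappa definitions: per-subject agreement P_i, mean observed agreement P̄, and chance agreement P̄e = Σ p_j². The z-score uses the large-sample standard error of kappa under the null hypothesis of chance-level agreement, and the p-value is one-sided (kappa > 0); both choices are assumptions of this sketch, and a given statistical package may compute them differently. The function name `fleiss_kappa` is hypothetical, and the count table is illustrative (not data from a real study), shaped like the psychiatrist example: 10 subjects, 14 raters, 5 categories.

```python
import math
from typing import List, Tuple


def fleiss_kappa(counts: List[List[int]]) -> Tuple[float, float, float]:
    """Return (kappa, z, one-sided p-value) for a subjects x categories count table.

    counts[i][j] is the number of raters who assigned subject i to category j;
    every row must sum to the same number of raters n (n >= 2).
    """
    N = len(counts)
    n = sum(counts[0])
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    k = len(counts[0])
    # p_j: share of all N*n assignments that went to category j.
    p = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    # P_i: share of agreeing rater pairs for subject i.
    P_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    P_bar = sum(P_i) / N            # mean observed agreement
    P_e = sum(pj * pj for pj in p)  # agreement expected by chance
    if P_e == 1.0:
        raise ValueError("all ratings fall in one category; kappa is undefined")
    kappa = (P_bar - P_e) / (1.0 - P_e)
    # Large-sample standard error of kappa under H0 (chance-level agreement).
    pq = sum(pj * (1.0 - pj) for pj in p)
    spread = pq ** 2 - sum(pj * (1.0 - pj) * (1.0 - 2.0 * pj) for pj in p)
    se0 = math.sqrt(2.0 / (N * n * (n - 1))) * math.sqrt(spread) / pq
    z = kappa / se0
    p_value = 0.5 * math.erfc(z / math.sqrt(2.0))  # one-sided: P(Z >= z)
    return kappa, z, p_value


# Illustrative counts: 10 subjects (rows), 14 raters each, 5 categories (columns).
counts = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]
kappa, z, p_value = fleiss_kappa(counts)
print(f"kappa = {kappa:.3f}, z = {z:.2f}, one-sided p = {p_value:.2g}")
```

For this table kappa comes out at roughly 0.21 with a very small p-value, which illustrates the point above: the agreement is clearly better than chance, yet the kappa value itself is low and still has to be judged against your own acceptance threshold.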
